Any Chat Completions MCP Server

Overview

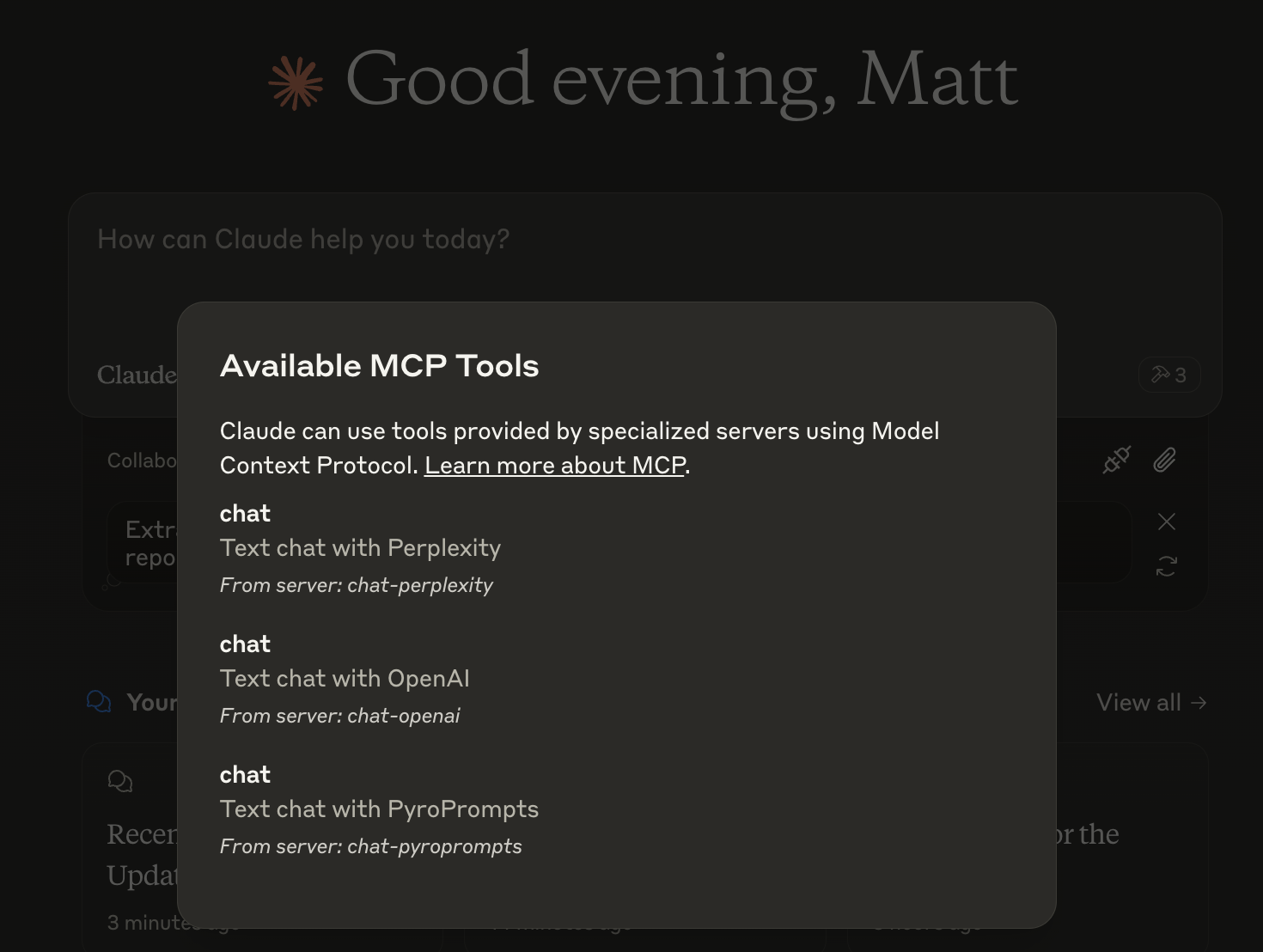

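What is Any Chat Completions MCP?

Any Chat Completions MCP is a versatile server that exposes any large language model (LLM) as a tool. Developers and users can use it to integrate different LLMs into their applications and extend chatbots and other conversational interfaces. Drawing on those models lets them build more engaging, more capable interactions, which makes it a useful resource for businesses and developers.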

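Features of Any Chat Completions MCP

- Multi-LLM support: The server supports multiple LLMs, so users can choose the model that best fits their needs.

- Easy integration: A simple API lets developers integrate the server into existing applications.

- Scalability: The architecture is designed to handle large volumes of requests, making it suitable for both small projects and large-scale applications.

- Customizable: Users can customize the LLM's behavior for specific use cases to improve the user experience.

- Open source: As an open-source project, it encourages community contributions and transparency.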

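How to use Any Chat Completions MCP

- Install: Clone the repository from GitHub and install the required dependencies.

- Configure: Set up your environment by configuring the server settings that specify which LLM to use.

- API integration: Use the provided API endpoints to send requests to the server and receive responses from the LLM.

- Test: Test the integration in a development environment to confirm that everything works as expected.

- Deploy: Once testing is complete, deploy the server to production and start using the LLM in your application.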
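For the API integration step, here is a minimal sketch of what a request to the server might look like, assuming the server is reached over the MCP stdio transport, where each message is a single line of JSON-RPC 2.0. The tool name `chat` and its `content` argument are hypothetical placeholders, not names confirmed by this page; a real client should discover the actual tool names with a `tools/list` request first.

```python
import json
from itertools import count

# Monotonic request ids, as JSON-RPC requires a unique id per request.
_ids = count(1)


def make_request(method, params=None):
    """Build one JSON-RPC 2.0 request as a single newline-terminated line."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    # json.dumps emits no raw newlines, so the message stays on one line.
    return json.dumps(message) + "\n"


def make_tool_call(tool_name, arguments):
    """Build an MCP `tools/call` request for the given tool."""
    return make_request("tools/call", {"name": tool_name, "arguments": arguments})


if __name__ == "__main__":
    # Hypothetical tool name and argument, used for illustration only.
    print(make_request("tools/list"), end="")
    print(make_tool_call("chat", {"content": "Hello"}), end="")
```

The sketch only builds the messages. A working session also needs the MCP `initialize` handshake before any tool call, so in practice an existing MCP client library is likely the simpler route.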

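Frequently asked questions

Q: What is the main use case for Any Chat Completions MCP?

A: The main use case is enhancing chatbots and conversational interfaces by integrating various LLMs, which allows for smarter and more engaging interactions.

Q: Is Any Chat Completions MCP free to use?

A: Yes. It is an open-source project, so it is free to use and modify.

Q: Can I contribute to the project?

A: Yes, contributions are welcome. You can submit issues, feature requests, or pull requests on the GitHub repository.

Q: Which programming languages are supported?

A: The server is designed to be language-agnostic, and its API can be accessed from popular programming languages such as Python, JavaScript, and Java.

Q: How do I report a problem or bug?

A: Create an issue on the GitHub repository and include the details of the problem you encountered.

By using Any Chat Completions MCP, developers can significantly enhance the conversational capabilities of their applications, which makes it a strong tool for AI and machine learning work.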

Details

Server configuration

{
  "mcpServers": {
    "any-chat-completions-mcp": {
      "command": "docker",
      "args": [
        "run",
        "-i",
        "--rm",
        "ghcr.io/metorial/mcp-container--pyroprompts--any-chat-completions-mcp--any-chat-completions-mcp",
        "npm run start"
      ],
      "env": {
        "AI_CHAT_KEY": "ai-chat-key",
        "AI_CHAT_NAME": "ai-chat-name",
        "AI_CHAT_MODEL": "ai-chat-model",
        "AI_CHAT_BASE_URL": "ai-chat-base-url"
      }
    }
  }
}
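The configuration above can also be generated rather than written by hand, which helps catch a missing setting before the client starts the container. The following is a sketch under stated assumptions: it treats all four `AI_CHAT_*` variables as required (this page does not say which are optional), and the provider values in the usage section are hypothetical placeholders.

```python
import json

# Settings listed in the server configuration; treating all four as
# required is an assumption made for this sketch.
REQUIRED_ENV = ("AI_CHAT_KEY", "AI_CHAT_NAME", "AI_CHAT_MODEL", "AI_CHAT_BASE_URL")

# Container image and start command copied from the configuration above.
IMAGE = (
    "ghcr.io/metorial/mcp-container--pyroprompts--"
    "any-chat-completions-mcp--any-chat-completions-mcp"
)


def build_server_entry(env):
    """Return one `mcpServers` entry, or raise if a setting is missing."""
    missing = [key for key in REQUIRED_ENV if not env.get(key)]
    if missing:
        raise ValueError("missing settings: " + ", ".join(missing))
    return {
        "command": "docker",
        "args": ["run", "-i", "--rm", IMAGE, "npm run start"],
        "env": {key: env[key] for key in REQUIRED_ENV},
    }


def build_config(entries):
    """Map server names to entries under the top-level `mcpServers` key."""
    return {
        "mcpServers": {name: build_server_entry(env) for name, env in entries.items()}
    }


if __name__ == "__main__":
    # Hypothetical placeholder values; substitute your provider's details.
    config = build_config({
        "any-chat-completions-mcp": {
            "AI_CHAT_KEY": "your-api-key",
            "AI_CHAT_NAME": "example-provider",
            "AI_CHAT_MODEL": "example-model",
            "AI_CHAT_BASE_URL": "https://api.example.com/v1",
        }
    })
    print(json.dumps(config, indent=2))
```

Passing several named entries to `build_config` would yield one server per LLM, which is one possible way to use the multi-LLM support described earlier.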