Any Chat Completions MCP Server

Overview

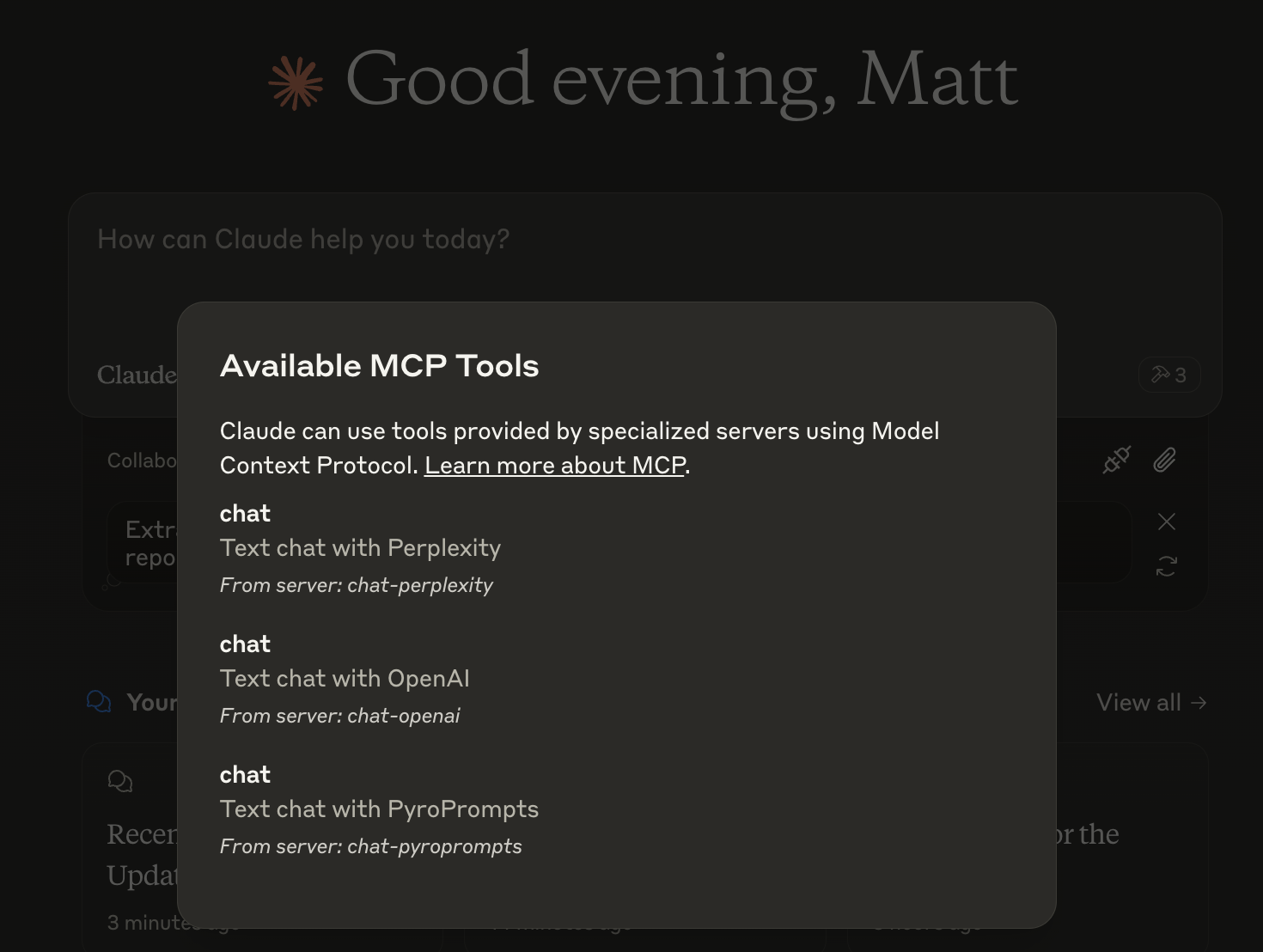

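What is Any Chat Completions MCP?

Any Chat Completions MCP is a versatile server that exposes any large language model (LLM) as a tool. It lets developers and users integrate a range of LLMs into their applications, extending what chatbots and other conversational interfaces can do. Because the LLM is available as a tool, users can build more engaging and capable interactions, which makes the server a useful resource for both businesses and developers.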

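Features of Any Chat Completions MCP

- Multi-LLM support: The server supports multiple LLMs, so users can pick the model that best fits a given need.

- Easy integration: A simple API lets developers integrate the server into existing applications.

- Scalability: The architecture is designed to handle a large volume of requests, making it suitable for small projects and large applications alike.

- Customizable: Users can tailor the LLM's behavior to a specific use case to improve the user experience.

- Open source: As an open-source project, it encourages community contributions and transparency.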

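How to Use Any Chat Completions MCP

- Installation: Clone the repository from GitHub and install the required dependencies.

- Configuration: Set up your environment by configuring the server settings that specify which LLM to use.

- API integration: Use the provided API endpoints to send requests to the server and receive the LLM's responses.

- Testing: Test the integration in a development environment to confirm that everything works as expected.

- Deployment: Once testing is complete, deploy the server to production and start using the LLM in your application.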
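The configuration and API integration steps can be sketched roughly as follows. This is a minimal illustration, not the server's actual implementation: the four setting names come from the server configuration in this document, while the helper names `load_settings` and `build_chat_request` are hypothetical. It also assumes the chosen provider accepts an OpenAI-style `POST <base_url>/chat/completions` request with a bearer token, which may not hold for every provider. The sketch only builds the request and never sends it.

```python
import json
import os

# Setting names taken from the server configuration in this document.
REQUIRED_VARS = ("AI_CHAT_KEY", "AI_CHAT_NAME", "AI_CHAT_MODEL", "AI_CHAT_BASE_URL")


def load_settings(env=None):
    """Return the four settings, raising ValueError if any is missing or empty."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise ValueError("missing settings: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_VARS}


def build_chat_request(settings, prompt):
    """Build (url, headers, body) for a chat completions call.

    Assumption: the provider exposes an OpenAI-compatible endpoint at
    <base_url>/chat/completions and authenticates with a bearer token.
    """
    url = settings["AI_CHAT_BASE_URL"].rstrip("/") + "/chat/completions"
    headers = {
        "Authorization": "Bearer " + settings["AI_CHAT_KEY"],
        "Content-Type": "application/json",
    }
    body = {
        "model": settings["AI_CHAT_MODEL"],
        "messages": [{"role": "user", "content": prompt}],
    }
    return url, headers, json.dumps(body)
```

Checking the settings up front means a missing key or base URL fails at startup with a clear message rather than on the first request.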

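FAQ

Q: What is the main use case for Any Chat Completions MCP?

A: The main use case is enhancing chatbots and conversational interfaces by integrating various LLMs, enabling smarter and more engaging interactions.

Q: Is Any Chat Completions MCP free to use?

A: Yes. It is an open-source project, so it is free to use and modify.

Q: Can I contribute to the project?

A: Absolutely. Contributions are welcome. You can submit issues, feature requests, or pull requests on the GitHub repository.

Q: Which programming languages are supported?

A: The server is designed to be language-agnostic, and its API can be accessed from popular programming languages such as Python, JavaScript, and Java.

Q: How do I report a problem or bug?

A: Open an issue on the GitHub repository and include details about the problem you encountered.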

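With Any Chat Completions MCP, developers can significantly improve the conversational capabilities of their applications, which makes it a powerful tool for AI and machine learning work.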

Details

Server Configuration

{
  "mcpServers": {
    "any-chat-completions-mcp": {
      "command": "docker",
      "args": [
        "run",
        "-i",
        "--rm",
        "ghcr.io/metorial/mcp-container--pyroprompts--any-chat-completions-mcp--any-chat-completions-mcp",
        "npm run start"
      ],
      "env": {
        "AI_CHAT_KEY": "ai-chat-key",
        "AI_CHAT_NAME": "ai-chat-name",
        "AI_CHAT_MODEL": "ai-chat-model",
        "AI_CHAT_BASE_URL": "ai-chat-base-url"
      }
    }
  }
}
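
If you prefer to generate this configuration rather than edit it by hand, the sketch below shows one way to do so. It mirrors the keys, image name, and arguments from the configuration above; the function names `make_config` and `render` are hypothetical, and the values passed in are placeholders you would replace with your own provider details.

```python
import json

# Image name and arguments copied from the server configuration above.
IMAGE = (
    "ghcr.io/metorial/"
    "mcp-container--pyroprompts--any-chat-completions-mcp--any-chat-completions-mcp"
)
SERVER_KEY = "any-chat-completions-mcp"


def make_config(key, name, model, base_url):
    """Return a dict with the same shape as the configuration above."""
    values = {
        "AI_CHAT_KEY": key,
        "AI_CHAT_NAME": name,
        "AI_CHAT_MODEL": model,
        "AI_CHAT_BASE_URL": base_url,
    }
    # Refuse to emit a config with empty values.
    empty = [k for k, v in values.items() if not v]
    if empty:
        raise ValueError("empty values for: " + ", ".join(empty))
    return {
        "mcpServers": {
            SERVER_KEY: {
                "command": "docker",
                "args": ["run", "-i", "--rm", IMAGE, "npm run start"],
                "env": values,
            }
        }
    }


def render(config):
    """Serialize the config as indented JSON text."""
    return json.dumps(config, indent=2)
```

For example, `print(render(make_config("your-key", "YourProvider", "your-model", "https://your-provider.example/v1")))` prints a block you could paste into your MCP client's configuration file.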